Common Learning Outcomes

Program Learning Outcomes

Academic Program Design

Academic Program Quality

Academic Integrity

Category One Overview

As a technical institute with a mission of educating for employment, Southeast Tech places students at its center. From the moment a student applies for a program to graduation and employment, Southeast Tech designs processes for learning and support which will lead to the greatest student success. To that end, Common Learning Outcomes (CLO’s) and Program Learning Outcomes (PLO’s) have been identified and regularly assessed in alignment with the Institute’s mission. Academic programs are intentionally designed to ensure that Southeast Tech is responsive to business, industry and community needs and meets the standards and rigor for each discipline. Co-curricular activities directly align with program areas and reinforce learning outcomes. The Institute’s internal customized Planning and Assessments database facilitates the capture and analysis of program and Institutional assessment results. A Celebrating Learning Team coordinates assessment activities related to CLO’s and PLO’s, assisting and coaching faculty and staff on a regular basis.

Southeast Tech considers the majority of its processes in Category 1 to be integrated, with repeatable and documented processes, results, and improvements arising from the use of data and other inputs.

While data associated with the Institute’s teams and departments have been available for years on the STInet internal website, and faculty and staff have been charged with referencing and using the data to inform planning and budget, data collected had not always been used to its full effect. The use of data varied according to area, with some using the data more effectively in decision-making than others.

However, with the implementation of the Institute’s new Strategic Plan, and the development of an improved Annual Planning process (4P2) that incorporates Institutional data directly into the budgeting and annual planning process, the Institute believes it now has processes that fully integrate its Helping Students Learn processes with the rest of the Institute’s processes, assuring that data is used more effectively in decision-making and informing change. The new process assures that performance levels are regularly reviewed and compared to targets, that discussions are held on what actions will achieve the desired target levels, and that budget allocations are made to meet the needs of the Institute. With this new Strategic Plan and Annual Planning process in place, Southeast Tech believes its results maturity level for Category 1 is at the integrated level.

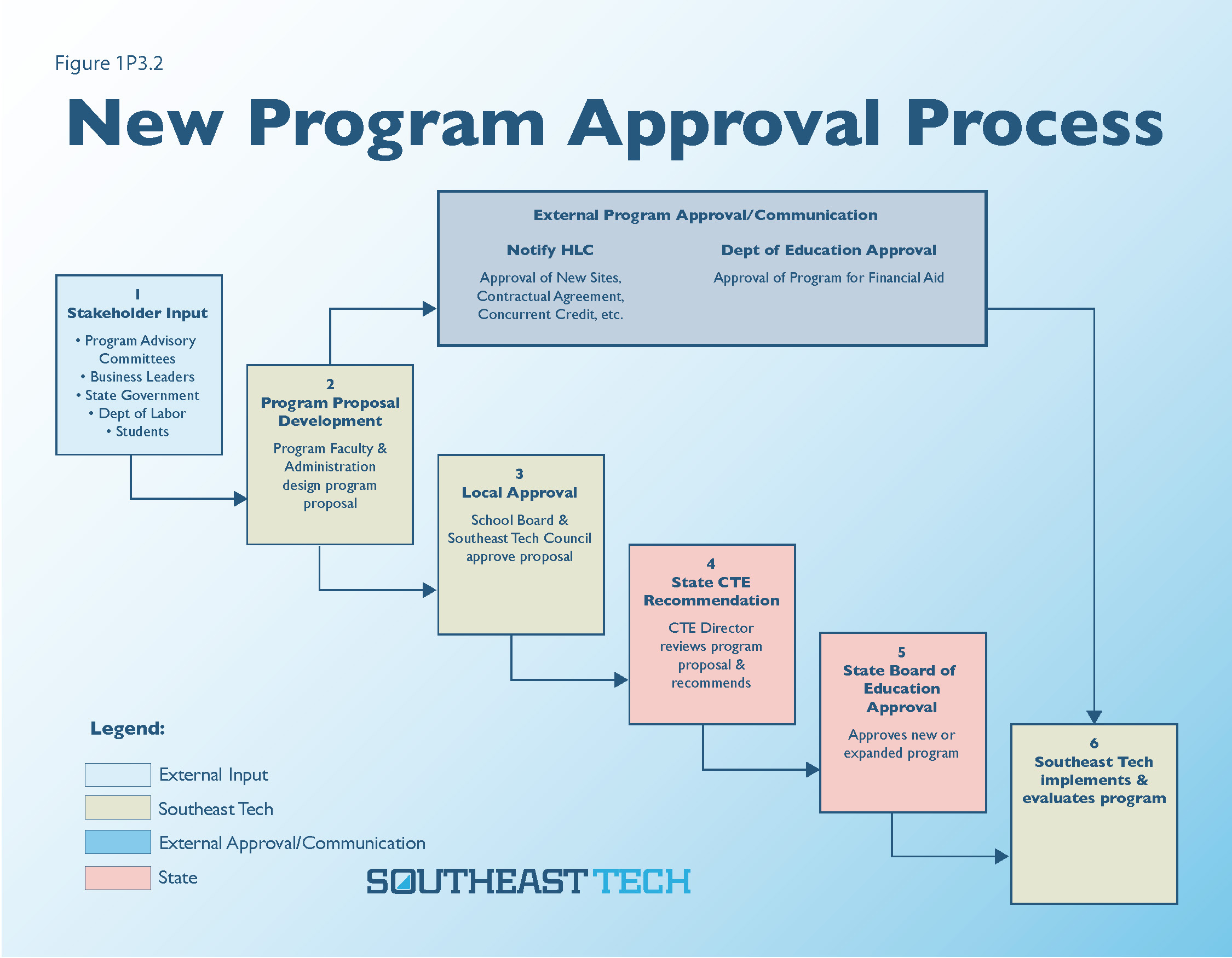

Academic Program Design is a formal process involving the State Department of Education, external stakeholders, and administration and faculty initiating program and curriculum design and improvements. A standing Curriculum Committee ensures thorough review and vetting of new courses and programs as well as the modifying and changing of existing courses and programs. Southeast Tech believes this process is at an integrated maturity level.

Academic Student Support is one of the strengths of the Institute as noted by the 2012 Systems Appraisal. Comprehensive Student Services include a renovated Library/Student Success Center, Adult Learning Center, and centralized support services. The Institute believes it is currently at an integrated maturity level for academic student support.

Academic Integrity is incorporated into Southeast Tech’s policies and procedures with a specific, communicated process for the reporting, investigation, and implementation of final decisions regarding integrity complaints or concerns. The Institute believes its processes are at the aligned maturity level.

1P1 Common Learning Outcomes

Common Learning Outcomes focuses on the knowledge, skills, and abilities expected of graduates from all programs. Describe the processes for determining, communicating, and ensuring the stated common learning outcomes and who is involved in those processes. This includes, but is not limited to, descriptions of key processes for:

- Aligning common outcomes to the mission, educational offerings, and degree levels of the institution (3.B.1, 3.E.2)

- Determining common outcomes (3.B.2, 4.B.4)

- Ensuring the outcomes remain relevant and aligned with student, workplace, and societal needs (3.B.4)

- Articulating the purposes, content, and level of achievement of the outcomes (3.B.2, 4.B.1)

- Incorporating into the curriculum opportunities for all students to achieve the outcomes (3.B.3, 3.B.5)

- Designing, aligning, and delivering co-curricular activities to support learning (3.E.1, 4.B.2)

- Selecting tools/methods/instruments used to assess attainment of common learning outcomes (4.B.2)

- Assessing common learning outcomes (4.B.1, 4.B.2, 4.B.4)

Common Learning Outcomes Process: A Brief History

Common Learning Outcomes (CLO’s) have been aligned to the mission, educational offerings and associate degree levels of Southeast Tech since their inception in 1995, with assessment occurring at the program level. CLO’s were directly tied to Southeast Tech’s mission of educating for employment by addressing the question, “What skills do employers of Southeast Tech’s graduates expect?” When the Institute moved toward an Institutional-level CLO assessment, the original set of outcomes was based on the 2000 Department of Labor SCANS Report, employer surveys of graduates, faculty and Program Advisory Committee input, and CLO’s at peer institutions. The twice-yearly Program Advisory Committee meetings provided feedback and input from businesses and industry regarding outcome relevancy to industry needs and helped to assure that curriculum reinforces these outcomes. The CLO’s were reviewed and revised from eight to four outcomes in 2005. In 2014, the term for the outcomes was changed from “Broad Student Outcomes” to “Common Learning Outcomes” to reflect common terminology in higher education. (3.B.1), (3.B.2)

Aligning Common Outcomes to the Mission, Educational Offerings, and Degree Levels of the Institution AND

Determining Common Outcomes

The formal process for determining and assuring alignment of CLO’s occurs during the first year of implementation of any new strategic plan. During each Strategic Planning process (4P2), Southeast Tech reviews its mission, vision and values and sets the future direction of the Institute. Because any changes to the mission, vision, values or Institute direction could impact the CLO’s (and therefore program learning outcomes, outcome matrices and lesson plans), the Institute assures that a formal review of CLO’s always occurs after the Strategic Planning process is completed (Figure 1P1.1).

Southeast Tech then gathers input (capture) on CLO’s by engaging stakeholders in the CLO review process. Both internal and external stakeholders are given the opportunity to provide input through surveys, Advisory Committee meetings, and focus groups. The Celebrating Learning Team (CLT), with the help of the Institutional Research Office, collects the data, analyzes it, and determines a course of action, which may include new CLO’s or revisions to current CLO’s (develop). Because Southeast Tech believes that the General Education department is integral to the development of CLO’s, imparting broad knowledge and intellectual concepts to all students, members of that department are included in the CLO review.

The recommendation of the CLT is sent to Southeast Tech stakeholders (both internal and external) for further review and modification. Once completed, the CLT prepares a final report on the CLO’s and presents the report to Southeast Tech’s Administrative Team for approval, then to the Southeast Tech Council and Southeast Tech Board for adoption (decide). The Academic Administrative Team then works with faculty and academic staff to integrate the new CLO’s into the curriculum, and the Vice President of Student Affairs and Dean of Students work with departments to communicate the new CLO’s to all Southeast Tech stakeholders (deploy). (3.B.2), (4.B.4)

Southeast Tech’s most recent strategic planning process was completed in Summer 2016 and approved in Fall 2016, and a new mission statement was developed, setting the stage for a new CLO cycle review in 2016-2017. The CLT is currently gathering input on the CLO’s from the Institute’s stakeholders, which will be used in Summer 2017 to determine any CLO adjustments or additions.

Southeast Tech’s requirement of a minimum of 15 general education credits for all AAS degrees (five to nine credits for diploma programs), including courses in composition, mathematics, sociology, and psychology, assures students are engaged in curriculum that helps build student skills in collecting, analyzing, and communicating information and developing the ability to succeed in an ever-changing world. General Education faculty function as a coherent team, meeting monthly to address areas such as online learning, curriculum, and student success, and are instrumental in the development of student general education course placement and transfer guidelines. (3.B.1)

Ensuring Common Learning Outcomes Remain Relevant and Aligned to Student, Workplace, and Societal Needs

Once established/revised, the CLO’s are reviewed annually during team and program meetings and with Program Advisory Committees. Additionally, the AQIP Education Design and Delivery and AQIP Celebrating Learning teams discuss the appropriateness and relevance of the CLO’s as part of the CLO assessment process (evaluate) (1P1 Selecting Tools and Targets, and Assessing Common Learning Outcomes). Included in that review are results from the Southeast Tech Employer Survey, which is conducted every other year and provides feedback from employers on graduate skill level attainment for various areas, including the CLO’s. The survey includes the opportunity for employers to provide feedback on additional skill areas they believe graduates may be missing or should be enhanced, which is reviewed for possible adjustments to the CLO’s by the Celebrating Learning and Education Design and Delivery teams. CLO adjustments may be recommended by these two teams and approved by the Administrative Team. Should these annual processes reveal a need to review the CLO’s at a more significant level prior to the next Strategic Plan, the President has the authority to begin the formal process review at any time (reflect).

Because the respect for diversity is one of Southeast Tech’s core values, awareness of the human and cultural diversity of a global society is incorporated into the Professionalism CLO and is included in instruction as learning outcomes within the Social Issues general education course, individual program coursework, and the Student Success Seminar course. Diversity events, activities, and/or awareness campaigns are provided annually. (3.B.4)

Articulating Purpose, Content, and Level of Achievement of Common Learning Outcomes

Southeast Tech has established the following four CLO’s:

Science & Technology: Technical competence including knowledge of technology and/or scientific principles as these apply to programs.

Problem Solving & Critical Thinking: The ability to select and use various approaches to solve a wide variety of problems – scientific, mathematical, social and personal. Graduates will also be able to evaluate information from a variety of perspectives, analyze data and make appropriate judgments.

Communication: The ability to communicate effectively in several forms – oral, written, nonverbal and interpersonal. Graduates will also demonstrate knowledge of how to manage and access information.

Professionalism: Strong work ethic, including responsible attendance; skill in teamwork and collaboration, as well as an ability to work with others, respecting diversity; ability to adapt to change; commitment to lifelong learning; adherence to professional standards; and positive self-esteem and integrity.

The assessment methods used to determine achievement of these outcomes are provided in 1R1. (4.B.1)

Southeast Tech articulates the CLO’s and their purpose and content through (publish):

- General Education mission and purpose statements

- Standardized syllabi template, including CLO’s

- Posters in classrooms

- Bookmarks in the Bookstore

- Online Southeast Tech Catalog

- CLO assessment results published in this portfolio (3.B.2)

Incorporating into the Curriculum Opportunities for All Students to Achieve the Outcomes

All programs provide multiple opportunities for students to practice and learn the CLO’s. Each program has:

- Aligned its specialized program outcomes (PLO’s) to the CLO’s through a published chart showing program outcomes within each CLO;

- Developed a Course Mapping Matrix that indicates the method (standardized test, performance test, portfolio, true/false or multiple choice test, etc.) used to assess the CLO’s by course;

- Developed a Program Mapping Matrix that indicates in which course PLO’s are taught and to what level (introductory, reinforcement, mastery) in order to assure curriculum is designed effectively to build skills and assure that all PLO’s are covered to the level expected of graduates.

Since PLO’s directly relate to CLO’s, this process also helps assure that the CLO’s are covered and mastered accordingly.

General education at Southeast Tech reinforces the teaching of the CLO’s through the core coursework required for diplomas and degrees. General education functions as an integral but complementary component of the AAS and diploma programs and is designed as a coherent core of courses taught by qualified faculty in the General Education Division (3P1). Since CLO’s are the skills all program graduates are expected to achieve by the time they graduate, these outcomes are integrated and assessed within each program as well as through the general education coursework. Program mapping matrices assure that content introduces, reinforces, and provides mastery opportunities for student achievement of CLO’s in a structured building-block approach. Review of these matrices provides for continued improvement of this process and opportunity for students to achieve the CLO’s. (3.B.3, 3.B.5)

To assure these linkages are further strengthened, General Education faculty participate on program Advisory Committees. This regular participation helps assure strong General Education/Program faculty collaboration, relevancy of general education courses to business and industry requirements and standards, and a positive model that encourages student understanding of the value general education plays in their learning. (3.B.1, 3.B.2, 3.B.3, 3.B.5)

Designing, Aligning, and Delivering Co-curricular Activities to Support Learning

Students at Southeast Tech have the opportunity to participate in various professional student organizations, primarily linked to career areas. These organizations promote leadership, field exposure, opportunities to attend state, regional and national conferences and competitions, and networking opportunities with employers. Currently, Southeast Tech has sixteen student program organizations (see 1P2 for listing). In addition to these organizations, Southeast Tech’s Student Government Association (SGA) provides students the opportunity to build leadership skills while providing student input into the planning and operations of the Institute. SGA is composed of two members from each program and is responsible for the development and implementation of campus student activities and campus events such as movie/pizza night, talent shows, dances, and holiday celebrations. (3.E.1)

To form a student organization or club, students must complete an application with the Student Activities Coordinator and develop a set of by-laws. All student organizations must be directly linked to a program of study. Student clubs can be developed around student interest areas, such as music, art, gaming, etc. Prior to approval, both organizations and clubs must have at least one Southeast Tech employee assigned as an advisor. All clubs and organizations must have a minimum of five active members. (3.E.1)

Each year both organizations and clubs are required to participate in a minimum of one service learning event. To assure that this requirement is met, Southeast Tech will be piloting an annual reporting process in 2017-2018, submitted to the Student Activities Coordinator, that details the service learning event, participation, and final outcomes. The report will also provide details on other organization/club accomplishments and activities and how the group reinforces CLO or PLO development. The report will then be made available to Southeast Tech stakeholders on STInet and incorporated into future planning and budget through the Annual Planning process (4P2). (3.E.1)

Once a student organization or club is established, it is allowed to continue into the next year as long as: 1. the by-laws are up-to-date; 2. an advisor is established; and 3. membership is kept at five or more students. (4.B.2)

Selecting Tools and Targets AND Assessing Common Learning Outcomes

A Brief History on CLO Assessment: The initial tools, assessment targets, and timetable for measuring the CLO’s were developed by Southeast Tech’s former Assessment Coordinator and Assessment Committee. From 2005-2010, a Writing Across the Curriculum assessment was implemented to measure the CLO of written communication. Programs from across the Institution submitted student writing samples, which were rated in the summer by a team of English faculty. Assessment of the technology, problem-solving, and professionalism CLO’s was left up to the program teams with assessments conducted at the program level on an annual basis. In 2012, the Celebrating Learning Team (CLT) was formed to develop a more Institution-wide assessment process.

The CLT developed and implemented a problem solving assessment in 2013, which collected student work on problem solving from programs across the Institute. The problem-solving assessment was repeated in 2014 after a CLT review and revision of the process. In 2015-2016, the CLT developed and conducted an Institutional professionalism assessment pilot (Figure 1P1.2).

Current Tool Selection and Assessment Process: The Celebrating Learning Team has been delegated the responsibility for overseeing Southeast Tech’s formal CLO assessment process. Team membership includes an Academic Administrator, a member of the campus Institutional Research Office, and faculty and staff representing programs and departments from across campus. The CLT determines the methods used to assess the CLO’s at the institutional level and creates rubrics or other measures for CLO’s. Measures are developed to allow Southeast Tech to gauge Institutional-level CLO achievement while remaining flexible enough to be individualized at the program level to meet varying industry requirements. The CLT determines which CLO’s will be measured in what year (Figure 1P1.1 and 1P1.2) and establishes Institutional assessment targets, which are then approved by the Administrative Team. (4.B.1, 4.B.4)

Throughout the process of selecting assessment tools, setting assessment targets, and developing and deploying CLO assessments, the CLT seeks direction and input from faculty and staff at all-campus monthly meetings, in-services, through email requests for suggestions, and through direct discussions with CLT members. Prior to any assessment selection or implementation, the process is approved by the Southeast Tech Academic Administrative Team. The CLT members function as coaches for faculty and staff, helping them throughout the process of creating and implementing CLO assessments, as well as helping programs to develop action plans based on assessment results. (4.B.4)

CLO’s are assessed on a rotational schedule established by the CLT (Figure 1P1.1). Southeast Tech generally assesses CLO’s during the spring semesters and requests that programs assess those students who are closest to graduating. Each program is responsible for conducting and submitting results of the CLO assessment within their program area. The CLT provides assistance in this process as needed and guides faculty in the completion of the assessment. (4.B.1)

Once the assessment results are collected, the assessment data is aggregated by the Institutional Research Office and reviewed by the CLT during the Team’s summer retreat. Aggregated and disaggregated-by-program data are stored in Southeast Tech’s Planning and Assessments database and are available for review by all Institutional employees on the STInet intranet site. An Institutional assessment report is created, and overall Institutional and individual program results are presented at employee meetings and/or posted on the Institutional STInet site to provide access to all employees. The assessment report includes an analysis of the overall student strengths and weaknesses as related to the CLO’s and provides suggestions for improvement or further analysis by program and general education faculty. Program faculty then use their individual program results for program improvement and review by Advisory Committees (1P2). Overall results are posted within the Systems Portfolio for external stakeholder access (1R1).

Along with direct assessment of CLO achievement, Southeast Tech also uses its Job Placement and Employer Survey results to assess student overall achievement. Placement rates and Employer Survey results, which include questions directly related to CLO achievement, are located in 1R1 and provide indirect evidence that the Institute is meeting its CLO assessment objectives. The CLT analyzes the data from both of these sources with the direct assessment data as part of its review of graduate academic achievement of CLO’s.

At the Institutional level, the CLT reviews the overall results and determines what actions, if any, are necessary for improvement. The CLT then implements actions and makes recommendations to Southeast Tech administration for those actions needing administrative approval or support (4.B.1, 4.B.2, 4.B.4).

Southeast Tech defines four Institutional student learning outcomes and measures these outcomes both at the Institutional and program levels:

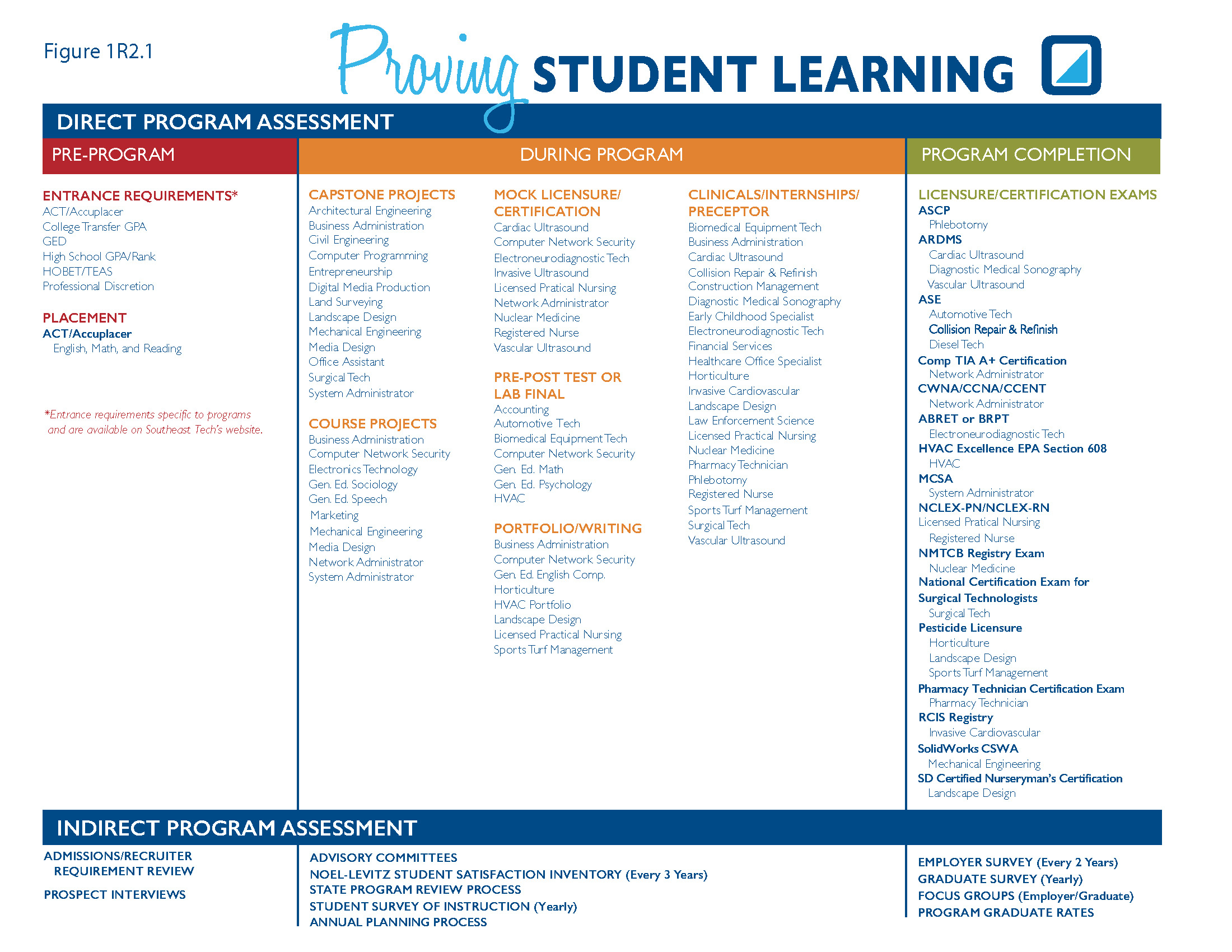

Science and Technology

Program areas define specific measurements and targets that meet the technical standards required of their industry (1R2, Figure 1R2.1 Proving Student Learning Chart). To document the achievement of an Institutional-level Science and Technology Outcome, each program runs the assessment and reports the results to the Office of Institutional Research, reporting the number taking the assessment and the number achieving the established target level. This information is then aggregated into an Institutional Science and Technology CLO assessment result, allowing program flexibility in determining an appropriate technical standards assessment, while assuring that Institutional Research can aggregate data into an Institutional-level achievement result.
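The aggregation described above can be illustrated with a minimal sketch. It assumes that Institutional Research pools the reported counts across programs (rather than averaging program percentages), which the text does not state explicitly. The class and function names, the program names, and the counts are hypothetical and are not Southeast Tech data; the 80% target is the one cited in 1R1.

```python
from dataclasses import dataclass


@dataclass
class ProgramResult:
    program: str      # program name (hypothetical examples below)
    assessed: int     # number of students taking the assessment
    met_target: int   # number achieving the program's target level


def institutional_rate(results):
    """Pool program counts into one Institutional-level percentage."""
    total_assessed = sum(r.assessed for r in results)
    total_met = sum(r.met_target for r in results)
    if total_assessed == 0:
        raise ValueError("no students were assessed")
    return 100.0 * total_met / total_assessed


# Made-up counts for illustration only; not Southeast Tech data.
sample = [
    ProgramResult("Program A", assessed=40, met_target=34),
    ProgramResult("Program B", assessed=25, met_target=18),
    ProgramResult("Program C", assessed=35, met_target=30),
]
rate = institutional_rate(sample)
meets_target = rate >= 80.0  # 80% is the Institutional target cited in 1R1
```

Pooling counts weights each program by the number of students it assessed, so a small program with an unusually low or high result does not dominate the Institutional figure.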

Problem Solving/Critical Thinking

The Celebrating Learning Team (CLT) developed a problem-solving/critical thinking rubric that is easily adaptable to the needs of individual program areas. Each program uses this common rubric to score an appropriate problem-solving assessment for their program area. These results are then aggregated by the Office of Institutional Research for the Institutional-level problem solving/critical thinking assessment.

Communication

Southeast Tech has developed a Writing Across the Curriculum (WAC) communication assessment based on program student writing samples within a range of appropriate writing assignments as determined by programs and approved by the English department faculty to assure the writing sample meets requirements for assessment. The samples are scored based on a WAC rubric designed by the CLT with input from English faculty. Results are provided to the Office of Institutional Research to be aggregated into the Institutional-level communication assessment.

Professionalism

In Spring 2016, Southeast Tech piloted its Institutional-level professionalism assessment. The CLT created a rubric that is adaptable to all program areas. Programs then conducted the assessment with results returned to the Office of Institutional Research to be aggregated into Institutional-level results.

For all assessments, the Office of Institutional Research and the CLT develop and publish on STInet reports providing aggregated and disaggregated analysis and results. The CLT also disseminates the report to all employees through email and on the STInet site. CLT members discuss individual program results with program faculty as requested.

1R1 Common Learning Outcome Results

What are the results for determining if students possess the knowledge, skills, and abilities that are expected at each degree level?

- Summary results of measures (include tables and figures when possible)

- Comparison of results with internal targets and external benchmarks

- Interpretation of results and insights gained

Summary Results of Measures AND Comparison of Results with Internal Targets and External Benchmarks AND Interpretation of Results and Insights Gained

Assessment Results Timeline History

In 2004-2005, Southeast Tech began the process of developing Institutional-level CLO assessments. However, the Institute realized that it could not begin assessments in all four areas at the same time; therefore, the HLC Assessment Committee (now CLT) reviewed the 2001 and 2003 Employer Survey results (Table 1R1.3), which indicated that employers rated graduate communication skills lower than the other three CLO’s. (While the committee recognized the fact that problem-solving skills rated lower than communication skills in 2001, a significant change occurred in that skill rating in 2003. Therefore, the committee focused on communication and decided to wait for future survey results on problem-solving skills.)

Science and Technology

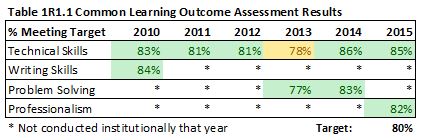

As indicated in Table 1R1.1, Southeast Tech has been assessing science and technology skill achievement (Technical Skills) for many years. In fact, these assessments have been conducted since the 1990’s.

2010-2016 Analysis and Insights: Because technical skills vary greatly by program, each program uses its own results for analysis and improvement (1P2 and 1R2). At the Institutional level, these results are documented and analyzed by the Celebrating Learning Team to assure that technical skill attainment, which is key to the mission of the Institute, is reaching the established target (80%). For the past six years, the Institute has met or exceeded the target except in 2013-2014, when the result fell to 78%. While no specific reason was determined regarding the drop, the Institute returned to above target results the following year and maintained that level in 2015-2016. (Note: 2016-2017 results will be available in summer/fall 2017.)

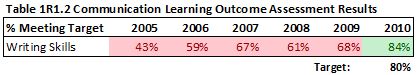

Communication

In 2005, the Institute conducted a Writing Across the Curriculum (WAC) assessment pilot. Student writing samples were gathered from all divisions and were analyzed and rated by Southeast Tech English faculty using a Likert scale rubric. An overall rubric score of 22 was determined by the HLC Assessment Committee to represent achievement of the student performance target. The score represented a 69% average on the seven WAC writing categories, which the committee determined would indicate an “average/acceptable score” for workplace writing competence. The Institute continued with the assessment until the results reached the established target level (Table 1R1.2).

2005 Analysis and Insights: As a pilot, Southeast Tech was satisfied with the initial results; however, through a reflection of the process, it was determined that incomplete directions were given to faculty regarding the student writing samples. Clearer direction was necessary to assure that the assessment process targeted student writing consistently across campus. Training and clearer direction were provided through monthly meetings and individual program assistance by the HLC Assessment Committee.

2006-2008 Analysis and Insights: Throughout the next three years, Southeast Tech’s HLC Assessment Committee continued to tweak the WAC assessment, providing faculty in-service presentations on writing, discussions on writing within program areas, and increasing program participation in the assessment process.

2009 Analysis and Insights: The HLC Assessment Committee felt that by 2009 the Institute had developed a solid CLO writing assessment. Programs found the assessment helpful in building and evaluating student writing ability and made curriculum and other changes accordingly. The results and changes were shared at faculty in-service sessions. As a result of analyzing trends and level of student achievement in writing, for example, program faculty became more aware of the need to emphasize the importance of written composition as evidenced by the fact that more writing assignments were introduced into program curriculum, and more program faculty considered writing quality when grading assignments. Throughout this process, Southeast Tech built an awareness of the importance of writing on campus and in the workplace.

2010 Analysis and Insights: In 2010, Southeast Tech met its CLO writing assessment target. With a successful implementation completed, the Institute moved into the development and deployment of assessments for the remaining CLO’s.

Problem Solving/Critical Thinking

Having successfully deployed a Communication CLO assessment, the Institute again reviewed employer survey results for 2009 and 2011 to determine which CLO to target next. As shown in Table 1R1.3, problem-solving skills showed the most opportunity for employer satisfaction improvement. A problem-solving assessment rubric and assessment process were developed in 2011-2012 with a pilot conducted in 2012-2013. After revision and reflection by the CLT, a follow-up assessment was conducted in 2013-2014. Similar to the communications assessments, programs collected student work on problem solving skills appropriate for their career area, scored the work based on a common rubric, and submitted the scores to the Office of Institutional Research, which aggregated the results and presented them to the Celebrating Learning Team for review. Final reports on both years of the problem-solving assessment were provided to stakeholders for further review, reflection, and action.

2013-2014 Analysis and Insights: The CLT used the 2012-2013 pilot problem-solving assessment to determine the effectiveness of the rubric and the associated assessment process. Overall, the Team felt that both the rubric and process were effective in assessing student problem-solving skills. The CLT decided to conduct a full problem-solving assessment the following year and use the two sets of results for a full analysis. The CLT also set as its target that “90% of assessed students will score at a 15 or higher on the established rubric.” A score of 15 would require the student to score an average of a 3 Agree (proficient) on each of the five assessed areas.
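The target rule stated above can be sketched as a short calculation. This is an illustrative sketch only: it assumes a student's rubric score is the sum of the ratings on the five assessed areas, which is consistent with a score of 15 corresponding to an average of 3 per area. The function names and the sample ratings are hypothetical.

```python
TARGET_SCORE = 15     # from the text: an average of 3 on each of five areas
TARGET_SHARE = 0.90   # from the text: 90% of assessed students


def total_score(area_ratings):
    """Sum the ratings for the five rubric areas (assumed scoring rule)."""
    if len(area_ratings) != 5:
        raise ValueError("the rubric has five assessed areas")
    return sum(area_ratings)


def share_meeting_target(students):
    """Fraction of students whose total rubric score is 15 or higher."""
    if not students:
        raise ValueError("no students were assessed")
    met = sum(1 for ratings in students if total_score(ratings) >= TARGET_SCORE)
    return met / len(students)


# Hypothetical ratings: one list of five area ratings per student.
students = [
    [3, 3, 3, 3, 3],  # total 15: meets the target score
    [4, 3, 3, 2, 3],  # total 15: a low area is offset by a high one
    [3, 2, 3, 3, 3],  # total 14: below the target score
    [4, 4, 3, 3, 4],  # total 18: meets the target score
]
share = share_meeting_target(students)
target_reached = share >= TARGET_SHARE
```

Under this assumed rule, a student can reach 15 with one rating below 3 if another area is rated above 3, so the total score alone does not show which rubric areas need attention.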

2014-2015 Analysis and Insights: Thirty-five programs, representing approximately 80% of all Institute programs, as well as five general education areas (Math, English, General Psychology, Sociology, and Speech), completed the problem-solving assessment. For those areas participating in both assessments, comparison data was provided for each year. As an Institute, 80% (493 out of 614) of the assessed students reached the target level (Table 1R1.1), an increase of 3 percentage points over the pilot assessment’s 77% (573 out of 746). Each program was provided the specific comparison data related to its students. Overall, 54% of the areas reached “90% of target or higher”. While the Institute did not reach its set target, the CLT set the target high in order to push for continuous improvement in problem-solving.
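The Institute-level percentages reported above follow directly from the student counts. The short Python sketch below reproduces that arithmetic; the helper name `percent` is hypothetical, and the counts are the ones quoted in the text.

```python
def percent(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


# Counts quoted in the text.
full_assessment = percent(493, 614)   # follow-up assessment
pilot_assessment = percent(573, 746)  # pilot assessment

# The reported change compares the rounded percentages.
point_change = round(full_assessment) - round(pilot_assessment)
```

Rounded to whole numbers, the two shares are 80% and 77%, a difference of 3 percentage points.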

Analyzing the results in terms of each part of the rubric, the Institute found that “selecting the best solution” and “analyzing solutions” had the greatest opportunity for improvement. The April 2015 “Problem Solving Assessment Report” was provided to all program areas with instructions to use the data for further area improvement (1I 1 ).

Professionalism

In Spring 2016, Southeast Tech conducted a pilot Institutional professionalism assessment that included 13 Southeast Tech programs from across the campus. A total of 134 students were assessed.

2015-2016 Analysis and Insights: Of the five professionalism rubric areas, commitment to lifelong learning appeared to be the most common opportunity for improvement; however, this area is also more difficult to quantify and evaluate, and will require the Institute to discuss in more depth what constitutes a true measure of lifelong learning. Overall, the results did meet the target and are indicators that the Institute is meeting its professionalism CLO (Table 1R1.1). Programs were provided with their individual program results to use for program-level improvement. The Institute is currently conducting the professionalism assessment as a campus-wide measure. The CLT will review these results in summer 2017 and determine future action steps at that time.

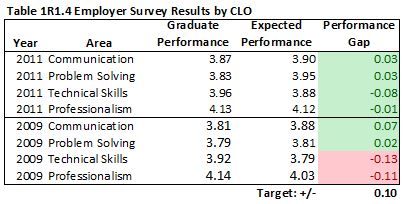

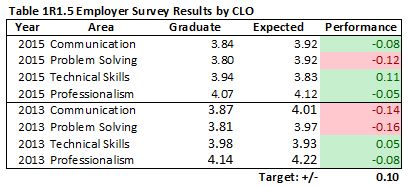

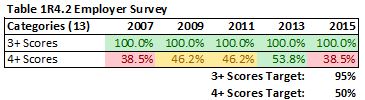

Employer Surveys

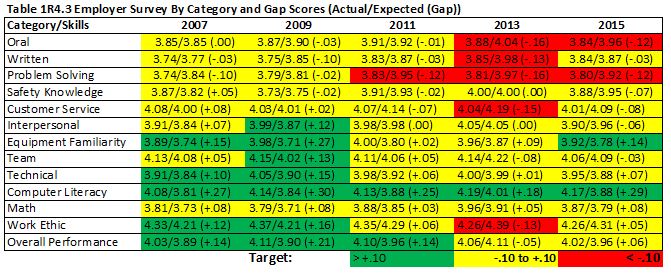

As part of its Employer Survey conducted every other year, Southeast Tech asks employers to rate its most recent graduates (past two years) in a variety of categories. These results, which are directly related to the four common learning outcomes, provide additional support regarding common learning outcome achievement and played a significant role in the Institute’s determination regarding the order for measuring the CLO’s.

Employers are asked to indicate what they expected the graduate performance level to be and the actual performance level for each area.

For Tables 1R1.3, 1R1.4, and 1R1.5, the first number by the CLO indicates the graduates’ actual performance level average. The second number indicates the employer’s expected performance level average. The number in parentheses indicates if the graduate exceeded expectations (+) or not (-). The Southeast Tech goal is for the gap between actual and expected performance to be within +/- .10.
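The gap calculation described above can be illustrated with a short Python sketch. It assumes the gap is simply actual minus expected, rounded to two decimals; the function names and the sample ratings are hypothetical, and only the +/- .10 goal comes from the text.

```python
GAP_GOAL = 0.10  # goal stated in the text: gap within +/- .10


def performance_gap(actual: float, expected: float) -> float:
    """Actual minus expected employer rating, rounded to two decimals.

    A positive value means graduates exceeded expectations;
    a negative value means they fell short.
    """
    return round(actual - expected, 2)


def within_goal(actual: float, expected: float, goal: float = GAP_GOAL) -> bool:
    """True when the gap is no larger than the goal in either direction."""
    return abs(performance_gap(actual, expected)) <= goal


# Hypothetical ratings, for illustration only.
gap = performance_gap(4.10, 4.22)
meets_goal = within_goal(4.10, 4.22)
```

With these hypothetical ratings, the gap falls outside the +/- .10 goal, which is the kind of result that would prompt further review.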

In all three tables, Southeast Tech has found that the Institute is doing an excellent job of meeting employer needs as related to the CLO’s.

2015-2016 Analysis and Insights: Both Table 1R1.3 and Table 1R1.4 are discussed in the sections above in relation to the CLO’s. Results for both the 2013 and 2015 employer survey (Table 1R1.5) indicate a larger negative gap between actual and expected performance for the Problem Solving CLO (-0.14 and -0.12 respectively). While the overall graduate performance scores are similar or even higher than previous years, employer expectations have risen as well, causing Communication and Problem Solving to have a negative gap (-.14 and -.16 respectively) in spring 2013, and again in problem solving (-.12) in spring 2015. As part of the Strategic Plan (4P 2 ), the Institute will be gathering employer data on the CLO’s to assure that the CLO’s are still meeting industry needs. Additionally, in spring 2017 Southeast Tech initiated sector breakfasts to gather direct employer expectations of the Institute and its graduates to better meet their needs. Finally, the Institute conducted its Employer Survey again during spring/summer 2017.

During summer 2017, the Office of Institutional Research will (1) collect and develop a report of the Employer Survey data; (2) collect, with the Celebrating Learning Team, information from employers on the CLO’s and any additions/adjustments based on employer and internal stakeholder input; and (3) gather the data from the sector breakfasts. Once collected, all three will be presented to the CLT for potential review and revision of the CLO’s and to the External Stakeholders Relationships Team for improvements in services and educational offerings to industry (3P 3 ).

1I1 Common Learning Outcome Improvements

Based on 1R 1 , what improvements have been implemented or will be implemented in the next one to three years? (4.B.3)

Improvements at the Institutional level:

- Created and implemented the Online Student Support position in 2013 to further support online student academic performance and assure CLO achievement across all modalities

- Developed and implemented a common problem-solving and professionalism rubric (CLT) to provide a consistent CLO assessment method and move these assessments from the program to the Institute level. The Institute has established outcomes that are aggregated campus-wide and disaggregated by program.

- Began review process of CLO’s to assure the CLO’s directly align with the Institute’s new mission statement and meet stakeholder needs. The review will be completed in summer 2017. To accomplish this, the CLT developed a survey for faculty to use with Advisory Committees to gather input regarding CLO’s. The survey was also sent to non-advisory stakeholders for input through our Career Connections software. Gathered data will be analyzed by the CLT, which will revise the current CLO’s as needed and make its recommendations to the Futures Team, which will review the CLO’s, make further adjustments, and send to the Administrative Team. The process will continue from the Administrative Team to the Council and finally to the Board, which will adopt new CLO’s for the Institute.

- The Institute’s second all-campus Diversity Fair was held in April 2017. The fair’s booths represented cultural, ethnic, gender, religious, sexual orientation, and other forms of diversity. Booth representatives shared their stories, experiences, talents and traditions with the campus community. The Institute’s goal is to hold the Diversity Fair every other year.

Examples of improvements at the program and department levels include:

- Since 2010, the Construction Management program has made significant changes to its curriculum based upon industry standards, the needs expressed by the Advisory Committee and the desire to improve student achievement on the Communication CLO. These curriculum changes included BUS 130 Business Communications, which has helped program students gain more confidence in their communication skills.

- Based on student feedback, peer listening sessions, and assessments of student learning, the Automotive program integrated more technology assistance into the classroom experience. Online testing and assignments have been implemented since 2010 with tests now almost exclusively completed online. Technology has also been used to record video of procedures and shop projects to better assist students and help them meet assessment requirements. Cameras and video displays are also used in the lab so students can better view the details of live demonstrations. These changes were based on the program’s efforts to better meet the Institute’s Communication CLO.

- The Automotive program added more industry certifications embedded into the program’s curriculum, providing more opportunities to assure technical skill standards are met through industry-recognized assessments.

- To improve student performance for the Institute’s Professionalism CLO and based on Advisory Committee input, the Automotive program now requires students to wear uniforms and assesses student safety, work ethic and overall professionalism levels daily.

- To improve mastery in Problem Solving skills, the General Education Mathematics department implemented the Hawkes Learning Systems software in 2015, which promotes mastery-level learning and offers immediate feedback with an Artificial Intelligence component. The department also implemented Math 099 in Fall 2012, a co-requisite course taken with Math 101 Intermediate Algebra and/or Math 102 College Algebra to provide students with additional support and resources.

- To improve mastery in Communication skills, the General Education English department implemented ENGL 099, a co-requisite course taken with ENGL 101 Composition to provide students with additional support and resources.

Over the next three years, Southeast Tech’s priorities for improving Common Learning Outcome results include:

- Introducing (2017-2018) the new CLO’s to the Southeast Tech community through the CLT and beginning work on Program Learning Outcome (PLO) review (1P2).

- Strengthening co-curricular programming and assessment through full implementation of the assessment and reporting process by the SGA/Student Activities Coordinator

- Embedding CLO assessments into program curriculum and striving for 100% program participation through the efforts of the CLT.

1P2 Program Learning Outcomes

Program Learning Outcomes focuses on the knowledge, skills, and abilities graduates from particular programs are expected to possess. Describe the processes for determining, communicating, and ensuring the stated program learning outcomes and who is involved in those processes. This includes, but is not limited to, descriptions of key processes for:

- Aligning program learning outcomes to the mission, educational offerings, and degree levels of the institution (3.E.2)

- Determining program outcomes (4.B.4)

- Ensuring the outcomes remain relevant and aligned with student, workplace, and societal needs (3.B.4)

- Articulating the purposes, content, and level of achievement of the outcomes (4.B.1)

- Designing, aligning, and delivering co-curricular activities to support learning (3.E.1, 4.B.2)

- Selecting tools/methods/instruments used to assess attainment of program learning outcomes (4.B.2)

- Assessing program learning outcomes (4.B.1, 4.B.2, 4.B.4)

Program Learning Outcomes Process: A Brief History

Since 1995, Southeast Tech has conducted assessments on program learning outcomes. It is the Institute’s goal that every program conducts a program-level assessment on an annual basis. Prior to the creation of the Celebrating Learning Team (CLT) (1P 1 ), Southeast Tech relied on an Assessment Coordinator and the Office of Institutional Research to assist faculty in developing, conducting and analyzing program assessments. While this initial approach provided the Institute with the ability to begin its program assessment process, Southeast Tech recognized the need to broaden responsibility for assessment, resulting in the creation of the CLT. The CLT has now developed a full process for both common and program learning outcome assessments.

Aligning Program Learning Outcomes to the Mission, Educational Offerings, and Degree Levels of the Institution AND Determining Program Outcomes

The formal process for determining and assuring alignment of Program Learning Outcomes (PLO’s) occurs after the alignment of the CLO’s to the new/revised Strategic Plan, mission, vision, and values. Because PLO’s are categorized under the Institutional CLO’s, waiting for any CLO changes assures that CLO adjustments are accounted for during the alignment and revision of the PLO’s. PLO revision and alignment, therefore, begins toward the end of the first year of the strategic plan and is completed prior to the end of the second plan year (1P 1 Figure 1P1.1).

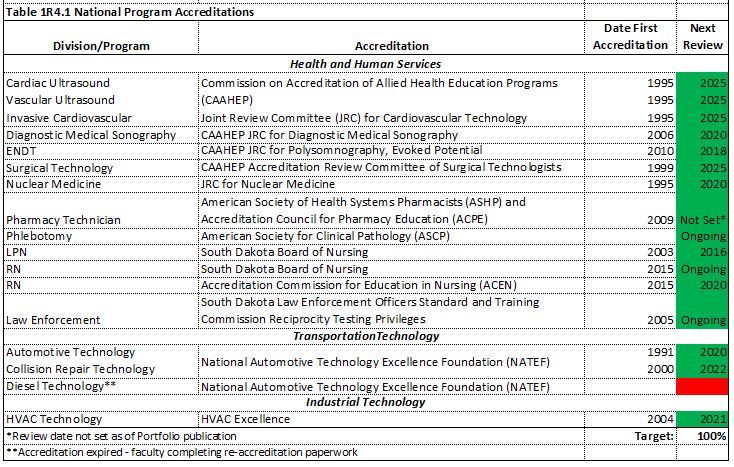

Program faculty are charged by administration with developing specific PLO’s for their particular programs. External stakeholders are given the opportunity to provide input on PLO’s through the Employer Survey, which is disaggregated by program for program faculty review, and through the program’s Advisory Committee. Programs with external accreditations specific to their field utilize these requirements/standards in the development of their PLO’s. Determining the methods for assessing student PLO attainment, and the assessment of attainment, is the responsibility of program faculty with support and guidance from the immediate supervisor and the CLT. Assessment methods and results are provided in 1R 2 . (4.B.4)

The CLT provides support to programs and assures that programs complete the alignment process by requiring notification of completion as well as a report of new/revised PLO’s. The PLO’s are communicated through the Southeast Tech Catalog and are available on the Southeast Tech website for stakeholder viewing. All PLO’s are approved by the Academic Administrator who oversees that program area. Upon approval of the PLO’s, the Vice President of Student Affairs and Director of Students work with the departments to communicate the PLO’s to all Southeast Tech stakeholders.

While Southeast Tech’s mission is to educate for employment, the Institute makes no explicit claim to students regarding job placement; however, based on high graduate placement rates (1R 2 ), Southeast Tech strongly believes that it fulfills its mission of educating for employment and meets any unspoken claims regarding contributions the Institute may provide to students’ educational experience. (3.E.2)

Ensuring Program Learning Outcomes Remain Relevant and Aligned with Student, Workplace, and Societal Needs

Program faculty ensure that their learning outcomes are relevant and aligned to student, workplace, and societal needs in a number of ways: interactions with business and industry representatives, bi-annual Advisory Committee meetings, external accreditation reviews, internal inservice learning outcome work sessions, participation in professional organizations and conferences, analysis of Employer Survey results and graduate placement rates. The use of external stakeholder input is essential in assuring an objective review of the relevance of program learning outcomes that meet industry and community needs. Review of the various inputs and revisions of PLO’s are reported in Advisory Committee meeting minutes and reviewed by an Academic Administrator to assure the review occurs and adjustments are completed. During program meetings with the Southeast Tech Academic Administrative Team, adjustments to PLO’s are made as needed. If an entire course or program change is determined necessary, the change must go through the Institute’s Curriculum Committee process (1P 4 ).

Because respect for diversity is one of Southeast Tech’s core values, awareness of the human and cultural diversity of a global society is incorporated into our Professionalism CLO and is included in instruction as learning outcomes within the general education Social Issues class, program coursework, and the Student Success Seminar course. Diversity events, activities, and/or communications are provided annually. (3.B.4)

Articulating the Purposes, Content, and Level of Achievement of Program Learning Outcomes

Program Learning Outcomes are articulated to all stakeholders on the program curriculum pages of the Southeast Tech Catalog, which is located on the Southeast Tech website. PLO’s are also provided on standardized syllabi according to the outcomes associated with that particular course. During JumpStart orientation days, program students review PLO’s with program faculty prior to beginning their program of study. PLO’s are also frequently reviewed with students during Academic Advising. (4.B.1)

Designing, Aligning, and Delivering Co-curricular Activities to Support Learning

As described in 1P 1 Common Learning Outcomes, Southeast Tech has a number of co-curricular activities directly linked to specific career areas. These professional student organizations provide student exposure to their career field as well as opportunities to participate in state, regional and national conferences and competitions and to network with potential employers. Currently, Southeast Tech has sixteen student program organizations. The program associated with the organization is listed in parentheses. (4.B.2)

- AITP (IT programs)

- Animation Technology Artisans (Digital Media Production)

- Civil Engineering Technology Student Organizations (Civil Engineering Tech and Land Surveying)

- Construction Management Student Organization (Construction Management)

- Dakota Turf/Golf Course Superintendent Association of America (Sports Turf Management)

- Early Childhood Student Organization (Early Childhood)

- Electroneurodiagnostic Technology Student Organization (ENDT)

- Law Enforcement Student Organization (Law Enforcement)

- National Association of Landscape Professionals (Horticulture programs)

- Nuclear Medicine Student Organization (Nuclear Medicine)

- SkillsUSA (Automotive and various other programs)

- Society of Manufacturing Engineers (Mechanical Engineering Tech)

- South Dakota Advertising Federation (Media Design)

- Southeast Tech Student HVAC Association (HVAC)

- Student Chapter of the Sioux Falls Home Builder’s Association (Architectural Engineering)

- Student Practical Nurses Association (LPN/RN)

All student organizations (and Southeast Tech clubs) must meet specific requirements as defined in 1P 1 . (3.E.1)

Selecting Tools/Methods/Instruments Used to Assess Attainment of Program Learning Outcomes

Faculty select various assessment tools and conduct assessments based on standards for their specialized fields. Because program faculty have worked in the professions they teach and continue to have direct ties to the industry through professional organizations, they are in the best position to know the needs of their industry and the type of assessment that works best in their field. Therefore, every program selects its own assessment tool and conducts its own assessments (1R 1 Proving Student Learning Chart).

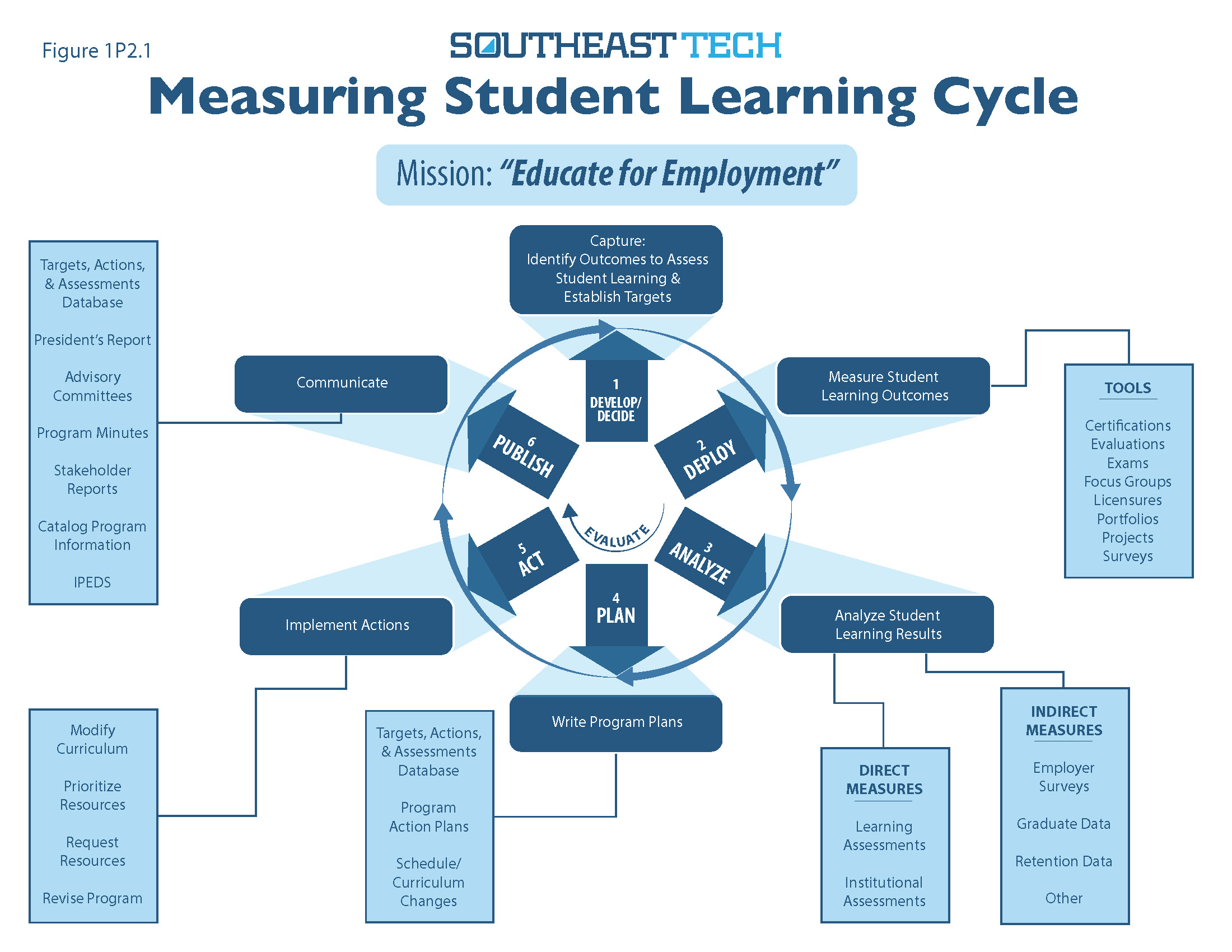

Program faculty then use the “Measuring Student Learning Cycle” to develop and implement their program assessments (Figure 2P1.1):

1. Capture/Develop/Decide: Program faculty take inputs from Advisory Committees, stakeholder surveys (Employer, Graduate and Student), common learning outcome assessments, etc. and use the information to develop program outcome assessments and assessment targets. The assessment tool and associated targets are then reviewed by the CLT and the program’s Academic Administrator.

2. Deploy: Program outcomes are then measured through an assessment tool chosen or developed by program faculty. Common tools are certifications/licensures, program evaluations, portfolios, projects, etc. Program faculty conduct the assessment and gather the results.

3. Evaluate: Assessment analysis against the established targets is conducted by the program faculty with assistance as needed by the CLT and Office of Institutional Research. Analysis includes the direct measures developed in step 1, but it may also include indirect measures such as employer surveys and graduate and retention data. The analysis is documented in the Planning and Assessments database.

4. Plan: From the assessment, plans for improvement are developed and documented in the Planning and Assessments database, including timelines for completion, which become part of the Annual Planning process (4P 2 ). (4.B.1, 4.B.3, 4.B.4)

5. Act: The plans in Step 4 are then implemented and may result in modified curriculum, requests for more resources, adjustments in entrance or graduation requirements, etc.

6. Communicate/Publish: Annual outcomes are documented in the Planning and Assessments database and may also become part of Advisory Committee minutes, the President’s report, State Program Review, etc. The cycle then begins again at Step 1.

To assure that faculty have the time to complete assessments and assessment reports, Southeast Tech utilizes faculty non-student contact days. Faculty can use one or more of these days to complete assessment requirements and develop assessment and improvement plans. Training on assessment occurs during new faculty training, in-service and workshop sessions, and during monthly building meetings. (4.B.1, 4.B.2, 4.B.3, 4.B.4)

Assessing Program Learning Outcomes

Southeast Tech measures program learning outcomes on an individual program level through the following direct measures:

Program Level Assessment: Every program has developed a program-specific outcome assessment tool based on what faculty, with Advisory Committee input and Academic Administrative approval, believe to be the most appropriate assessment for their particular career field. These may include project/presentation scores, certification/licensure pass rates, portfolios, pre/post-testing, etc. (Figure 1R2.1 Proving Student Learning).

Licensures and Certifications: Many Southeast Tech students take national licensure or certification exams associated with their program areas, a direct measure of student academic achievement.

Indirect measures are also used to measure program learning outcomes:

- Southeast Tech’s employer survey is disaggregated by program with results available to program faculty for review and analysis. Categories on the survey directly relate to learning outcomes and provide an employer assessment of graduate performance (1R 4 ).

- Though not as strong an outcome indicator, employment rates also provide general information regarding graduate performance. A low program employment rate may suggest that employers are not satisfied with the ability of the program graduates, indicating that the Institute needs to conduct further analysis of its curriculum and assessment processes (1R 4 ).

The results of all of these measures are analyzed at the program level and documented in the Planning and Assessments database.

1R2 Program Learning Outcome Results

What are the results for determining if students possess the knowledge, skills, and abilities that are expected in programs?

• Outcomes/measures tracked and tools utilized

• Overall levels of deployment of assessment processes within the institution

• Summary results of assessments (include tables and figures when possible)

• Comparison of results with internal targets and external benchmarks

• Interpretation of assessment results and insights gained

Overall Levels of Deployment of Assessment Processes Within the Institution

Southeast Tech has a long history of deploying program-level assessments dating back to the 1990s. The Institute uses direct assessments of student learning at the pre-program, program summative and program completion levels (Figure 1R2.1). The pre-program level provides the Institute with entry-student program readiness (entrance requirements) and placement results (English, math and reading). While not used for program level assessment, proper entrance and placement requirements help the Institute assure program level outcomes are achieved.

Program-level assessment is accomplished at the Summative and Program Completion levels. As shown in Figure 1R2.1, all Southeast Tech programs establish specific program-level assessments with some programs conducting more than one.

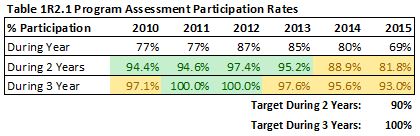

It is Southeast Tech’s goal that programs conduct their assessments on an annual basis; however, due to major changes in program curriculum, faculty, or other factors, not every program is able to conduct their assessment every year.

To assure that assessments are completed on a regular basis, Southeast Tech’s target is for 100% of programs to complete a program assessment a minimum of once every three years and 90% a minimum of once every two years. Table 1R2.1 provides the percentage of programs meeting these two targets.

While Southeast Tech met the “During 3 Year” target in 2011 and 2012, and the “During 2 Year” target in 2010-2013, overall participation in program assessment has fallen to within 90% of target for both 2014 and 2015. This is partly due to the addition of new programming and new faculty who are currently working to develop program-level assessments. However, some of the discrepancy is also due to programs not completing or not documenting their assessments.

Programs not meeting the participation targets are assisted by the Celebrating Learning Team to get the program’s assessments back on track. The Institute recognizes that new programs and new faculty are in need of additional training and assistance; therefore, the Institute has included this area for future improvements (1I 2 ).
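The two participation targets described above (100% of programs assessed at least once every three years, and 90% at least once every two years) can be checked with a short Python sketch. This is an illustration under stated assumptions, not the Institute's reporting method: the data layout (a mapping from program name to the set of years with a completed assessment), the function names, and the sample history are all hypothetical.

```python
from typing import Dict, Set

TWO_YEAR_TARGET = 0.90    # from the text: 90% at least once every two years
THREE_YEAR_TARGET = 1.00  # from the text: 100% at least once every three years


def share_assessed(history: Dict[str, Set[int]], current_year: int, window: int) -> float:
    """Fraction of programs with at least one assessment in the last `window` years."""
    if not history:
        raise ValueError("no programs supplied")
    recent_years = set(range(current_year - window + 1, current_year + 1))
    assessed = sum(1 for years in history.values() if years & recent_years)
    return assessed / len(history)


def participation_report(history: Dict[str, Set[int]], current_year: int) -> Dict[str, object]:
    """Compare two-year and three-year participation against the stated targets."""
    two_year = share_assessed(history, current_year, 2)
    three_year = share_assessed(history, current_year, 3)
    return {
        "during_2_year": two_year,
        "meets_2_year_target": two_year >= TWO_YEAR_TARGET,
        "during_3_year": three_year,
        "meets_3_year_target": three_year >= THREE_YEAR_TARGET,
    }


# Hypothetical assessment history, for illustration only.
sample_history = {
    "Program A": {2015},
    "Program B": {2014},
    "Program C": {2013},
    "Program D": {2011},
}
report = participation_report(sample_history, 2015)
```

In this hypothetical history, half of the programs were assessed in the last two years and three quarters in the last three, so neither target would be met.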

Summary Results of Assessments AND Comparison of Results with Internal Targets and External Benchmarks, AND Insights Gained

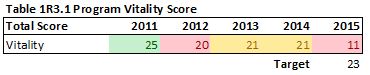

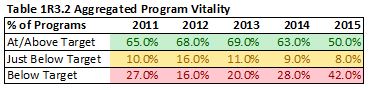

Program Level Assessment: Each program analyzes its own specific program assessment in order to determine if its target level is being met. While it is not possible to show each program’s results within the portfolio, Table 1R2.2 provides the percentage of programs meeting their program-specific targets (green) as well as the percentage within 90% of target (yellow) and the percentage below 90% of target (red).
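The green/yellow/red grouping described above can be sketched in Python as follows. The sketch assumes that higher results are better and that "within 90% of target" means a result of at least 90% of the target value; the function names and sample figures are hypothetical.

```python
from collections import Counter
from typing import Dict, Tuple


def status(result: float, target: float) -> str:
    """Classify one program's result against its own target."""
    if target <= 0:
        raise ValueError("target must be positive")
    if result >= target:
        return "green"    # target met
    if result >= 0.9 * target:
        return "yellow"   # within 90% of target
    return "red"          # below 90% of target


def status_percentages(results: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Percentage of programs in each color, given (result, target) pairs."""
    if not results:
        raise ValueError("no programs supplied")
    counts = Counter(status(result, target) for result, target in results.values())
    return {color: 100.0 * counts[color] / len(results) for color in ("green", "yellow", "red")}


# Hypothetical (result, target) pairs, for illustration only.
sample_results = {
    "Program A": (85.0, 80.0),
    "Program B": (90.0, 90.0),
    "Program C": (72.0, 80.0),
    "Program D": (60.0, 80.0),
}
summary = status_percentages(sample_results)
```

Because each program is compared with its own target, programs with very different assessment tools can still be summarized in a single table.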

2010-2016 Analysis and Insights: While the initial reaction to only 66% (year 2015-2016) of programs meeting their assessment targets might be negative, a review of these targets indicates that programs have set significantly high expectations. These expectations not only challenge the students; they also challenge the program to determine ways to meet the assessment expectations. While it would be easier to set lower targets that can be easily attained, setting stretch targets has led the Institute to more significant improvements at the program level (1I 2 ).

Licensures and Certifications: Twenty Southeast Tech programs are associated with one or more state or national licensures/certifications, which provide the Institute with an additional assessment of student learning at the program level, and in many cases the opportunity for national benchmarking. As Table 1R2.3 shows, Southeast Tech students regularly perform above the national average, meeting the Institutional target.

2010-2016 Analysis and Insights: Southeast Tech is pleased with the certification results, which are consistently above national pass rates. No Institute actions are planned; however, individual programs review results and set actions accordingly.

Employer Survey: Employer survey results are provided in 1R 4 as indicators of program quality; however, these results are also indicators of meeting program learning outcomes. Each program receives disaggregated data from the employer survey, which can then be used as an indirect measure of program assessment in thirteen areas: oral and written communication, customer service, interpersonal, problem solving, equipment familiarity, team, technical, safety, computer literacy, math and work ethic skills as well as overall performance.

Analysis and Insights: While providing individual program employer survey data within this portfolio is not possible, Southeast Tech graduates receive high praise from employers in almost every survey category regardless of program, generally meeting or outperforming employer expectations. In relation to program learning outcomes, Table 1R4.2 (in 1R4) indicates the percentage of programs with scores in the specific survey categories that average at the 3+ and 4+ ranking on a five-point rating scale. Table 1R4.3 provides evidence that a positive difference between actual and expected performance exists for the majority of the categories. Each program uses disaggregated data to develop action plans to better meet the employer needs identified in the survey. Southeast Tech is currently collecting and analyzing the results of its 2017 Employer Survey, which will be completed in Summer 2017.

Employment Rates: Because Southeast Tech is a technical institute with a mission of educating for employment, graduate employment rates provide an additional indirect measure of student program outcome achievement. Employment rates are also indicators of program quality; therefore, employment rates are provided in 1R 4 .

Analysis and Insights: A review of the Institute’s six-month graduate employment rates, which have been consistently at 90+% for employment and at 89+% for employment in field/related field, provides a strong indirect measure that the Institute is meeting its mission.

1I2 Program Learning Outcome Improvements

Based on 1R 2 , what improvements have been implemented or will be implemented in the next one to three years? (4.B.3)

Improvement at the Institutional level:

- Increased understanding across campus regarding the importance of program assessment and its role in continuous quality improvement;

- A knowledgeable Celebrating Learning Team that can assist program faculty with assessments and monitor assessment activity;

- The expansion of the Celebrating Learning Team to include staff members and department assessments.

Examples of Improvements at the program level include:

The Mechanical Engineering Technology program added eight Afinia 3D printers and switched to SolidWorks as its main modeling software to better align program training with local employers, based on industry standards, Advisory Committee input, and technical assessment results.

The Automotive program’s new lab (Fall 2016) increased the program’s ability to meet student needs by providing more lab space and a better lab environment. Students can now work in groups of two instead of three, increasing learning opportunities. In addition, the program added new equipment at the prompting of industry. Industry and the Advisory Committee have also been requesting more program graduates, and the program has had a long history of student wait lists. The new facility has allowed the Institute to increase student program enrollments to better meet industry needs. More hands-on opportunities have also given students more chances to improve their technical skills.

The System Administrator program now has a $110,000 NetLab virtual lab pod, which creates an online lab environment that can be accessed anywhere in the world. It is used for the program’s VMware vSphere training. The Advisory Committee recommended that the program teach vSphere. VMware is the industry standard for virtualization platforms for both medium and large businesses. Southeast Tech’s faculty recognized the need to include this skill in its curriculum and administration assured that the instructor received the necessary training. In addition to improving curriculum, the change has helped many students obtain jobs specifically because of their training in VMware.

Beginning in Fall 2014, the Surgical Technology program changed from a one-year diploma program to a two-year AAS program based on its national accreditation review and student feedback. The program’s national accrediting body, CAAHEP (Commission on Accreditation of Allied Health Education Programs), determined that surgical technology programs should award a minimum of an Associate’s Degree by August 1, 2021. However, Southeast Tech accelerated the timeline based on satisfaction surveys of graduates nine months after program completion. Over the past three years of graduate satisfaction survey results, 18 out of 30 graduates (60%) agreed that the additional year of coursework was appropriate to meet the training needs of the profession, given the large content-driven curriculum.

Based on results of its program learning assessments, the Biomedical Equipment Technology program implemented weekly assessment testing, similar to the national testing process. The change improved student performance on the program’s learning assessments.

The Early Childhood program, based on data collected from student requests and industry needs, introduced a hybrid program in Fall 2011. The hybrid option was later changed from weekend offerings to weekday offerings at the request of students to accommodate student family needs. Class sizes were also increased to meet industry needs. To increase student lab experiences and improve assessment results, the on-campus childcare center was added as a “lab” environment site, giving students more hands-on experiences with children in order to improve program learning outcomes. Students work at the center for one hour per week for 14 weeks in the first and second semesters. The center’s staff serve as mentors to the Early Childhood students, strengthening the skills of both the staff and the students. In Fall 2013, a remodeled location was dedicated to the program, providing a lab experience designed specifically for the program. To meet increased student numbers and retain program quality, an additional faculty member was added in Fall 2014. To provide a better opportunity for students to continue in the program even after a failed semester, the Institute moved the hybrid program to a spring start (Spring 2015), allowing new students to start in January and current students to retake failed courses without having to wait until August of the following year. The change also created the opportunity for non-traditional students to attend courses part time and continue to work during their enrollment.

While most programs are laptop based, Southeast Tech’s Invasive Cardiovascular Technology and Surgical Technology programs opted for an iPad-based classroom because of the opportunities iPads provide for graphic displays of human anatomy and for learning apps that apply to their program areas.

Over the next three years, Southeast Tech’s priorities for improving Program Learning Outcome results include:

- Assuring that a CLT member is immediately assigned to all new programs and new faculty to provide direct assessment mentoring.

- Providing basic assessment concept training as part of the new employee onboarding course (3P1) to assure all employees understand CLO and PLO assessments.

- Providing a more in-depth training to new faculty as part of the faculty training program (3P1).

1P3 Academic Program Design

Academic Program Design focuses on developing and revising programs to meet stakeholders’ needs. Describe the processes for ensuring new and current programs meet the needs of the institution and its diverse stakeholders. This includes, but is not limited to, descriptions of key processes for:

- Identifying student stakeholder groups and determining their educational needs (1.C.1, 1.C.2)

- Identifying other key stakeholder groups and determining their needs (1.C.1, 1.C.2)

- Developing and improving responsive programming to meet all stakeholders’ needs (1.C.1, 1.C.2)

- Selecting the tools/methods/instruments used to assess the currency and effectiveness of academic programs

- Reviewing the viability of courses and programs and changing or discontinuing when necessary (4.A.1)

Identifying Student Stakeholder Groups and Determining Educational Needs

Note: Because Southeast Tech’s specific student stakeholder groups frequently become our key student groups (2P1), the Institute has provided more detail on how it works with these student stakeholder groups in Category 2. In this section, the Institute will focus on the larger general population stakeholder groups we serve and how we meet their educational needs.

Southeast Tech’s current mission is to “educate for employment.” Therefore, our academic offerings are developed to specifically meet that mission, and the general student stakeholder groups we serve are those interested in pursuing educational options that lead to specific industry careers. Currently, the Institute offers the following options:

- Associate of Applied Science (AAS) degree programs: The AAS degree is designed to prepare students for a specific technical career and typically requires 60-75 credits for completion, including technical and general education courses. A full time student will generally complete the program in two years.

- Diploma programs: Diploma programs are also designed for entry-level positions in a specific technical career but typically require fewer credits for achievement and generally range from 30 to 45 credits with around five to nine general education credits. A full time student will typically complete a diploma program in one year or less. Diploma credits can frequently be used to fulfill AAS program requirements.

- Certificate programs: Southeast Tech currently offers a limited number of certificate programs, such as truck driving, which can generally be completed in a semester or less and require 16 or fewer credits or are non-credit based. Certificate programs are very focused and seldom include general education coursework. Certificate completion can frequently be used to fulfill requirements in diploma or AAS programs.

Capture: Given the mission of the Institute and the above program options, Southeast Tech begins the process of identifying general student stakeholder groups by capturing the inputs related to student stakeholder identification. The Office of Institutional Research collects these inputs, which include regional demographic data (age, race, economic status, educational levels), internal student population data (full/part time, age, race, economic status, transfer/first time, etc.), Employer Survey results, and graduate job placement rates and locations. Additional data is provided through internal and external stakeholder input, which may include environmental/workforce scans, unemployment rate data, potential incoming industries, etc. This information is frequently gathered through direct Institute connections with area Chambers of Commerce, Forward Sioux Falls, local school districts, state agencies, etc.

Develop: Once collected, data is reviewed by the Student Success Team, the Admissions department, and the Administrative Team. The Admissions department analyzes the data to determine what changes have taken place in terms of student recruitment: what student stakeholder groups are growing or shrinking, how regional demographics are changing, etc. This information is then used as part of the recruitment process to determine what adjustments are necessary to better recruit students interested in completing Institutional programs. The Administrative Team analyzes the data to determine how the community we are serving is changing and how we, as an Institute, can better meet community needs.

Decide: The Administrative Team, along with the Student Success Team and Admissions department, then determines what changes, if any, are necessary to the student stakeholder groups we serve and recruit. These changes are then implemented and become part of the data collection process for future review and analysis. Any changes to student stakeholder groups are communicated across campus to all employees through emails, direct discussions with those areas impacted by the change, and/or topics covered during monthly employee meetings. Each year, as new data becomes available, it is analyzed for changes and measured for impact. Student stakeholder groups are again reviewed and revised to determine any necessary changes.

Once student stakeholder groups are identified, the Institute determines each group’s educational needs. This process is described in full detail in 2P1 and involves departments, programs and employees from across campus. In general terms, the new student stakeholder group is given to the Student Success Team and the Office of Institutional Research, who work together to determine the stakeholder group’s educational needs. This is done by capturing and analyzing the data that was used to determine the student stakeholder groups, data provided from various departments, programs, and individuals from across campus, and Institutional research, which includes student retention, graduation rates, certificate completion, certifications received, etc. (See 2P1 for Southeast Tech’s process for deployment, evaluation, publishing/communicating, and reflection for meeting student stakeholder groups’ needs.)

The general population student stakeholder groups we currently serve, their initial educational needs, and a sample of the services we provide to meet those needs are:

- First Time Students: For students attending post-secondary education for the first time, the transition can be difficult. Students may have had strong support for pursuing higher education at home, but once the student moves to post-secondary education, that support may be far away and its impact may dwindle. Southeast Tech strives to assist these students by providing Institutional support through the Student Success Seminar course, Student Success and Academic Advisors, and JumpStart orientation days (2P1).

- Transfer Students: Transfer students to Southeast Tech make up 35-40% of the Institute’s student population. These students need a simple, effective process for transferring courses as well as an understanding of how transfer may impact financial aid and time to graduation. Southeast Tech offers a transfer equivalency calculator, reviews of financial aid, and initial registration and transfer review by Admissions to assist these students (2P1).

- Underprepared Students: Whether the underprepared student is first time or transfer, Southeast Tech strives to develop placement requirements that are specific to general education courses in order to assure that these students receive the initial assistance they need in order to be successful. Admissions staff assure students are placed appropriately and that initial student schedules are created to provide students the best opportunity for success. For students who have not yet received a GED or are in need of English as a Second Language (ESL) preparation prior to entry, Southeast Tech’s Hovland Learning Center provides these services. Finally, for those students who attend Southeast Tech but struggle academically, the Institute offers pre-academic coursework, peer tutoring, an Academic Recovery course and Student Success lab (2P1). (1.C.1, 1.C.2)

- Full Time/Part Time Students: With a regional unemployment rate of less than 3%, almost all Southeast Tech students hold full or part time jobs. This requires the Institute to be as flexible as possible with program offerings, which has resulted in Southeast Tech offering online programs and courses whenever possible, as well as flexible traditional schedules to meet student needs (2P1).

- Program-Specific Students: Every program has its own unique requirements and rigor; therefore, Southeast Tech has established specific program entrance requirements to assure students entering the program are ready to meet the academic challenges for that program (see 1P4 for the entrance requirement process). For those students who do not meet these entrance requirements, Southeast Tech has developed numerous options, which may include completing a certificate or diploma program prior to entering an AAS program, or taking a program over an extended period of time.

While ESL students are listed under “Underprepared Students”, the Institute has determined that this student stakeholder group’s needs may differ enough from the underprepared category to warrant further attention. Therefore, the Institute has established a sub-committee of the Student Success Team and developed an AQIP Action Project to determine and address this student stakeholder group’s needs (1R3 and 1I3). Additional information on the above student stakeholder groups and other key student groups can be found in 2P1. (1.C.1, 1.C.2)

Identifying Other Key Stakeholder Groups and Determining Needs

Southeast Tech has always maintained excellent relationships with its non-student key stakeholder groups. However, the previous methods used to maintain these relationships were not aligned with other processes within the Institute. While these previous methods provided opportunities to identify new key stakeholder groups and their needs, they did not assure that all new groups were identified, that needs were addressed in the most effective manner, or that these stakeholder relationships were communicated effectively across campus.

Therefore, in 2016-2017 Southeast Tech developed a stronger process for identifying key stakeholders, determining and meeting their needs, and developing stronger stakeholder relationships. This process is fully described in 2P2. The Institute also implemented a new AQIP team, called the External Stakeholder Relationships Team, that is dedicated to key stakeholder groups. This new team is described in 6P2.

See figure 2P3.1 for a list of the Institute’s currently identified non-student key stakeholder groups as well as how the Institute maintains these relationships and determines needs. The table also indicates each key stakeholder group’s currently identified needs and the champion who internally assures that stakeholder connections are maintained and enhanced. Each champion is a member of the External Stakeholder Relationships Team to assure that internal communications on key stakeholders occur.

Developing and Improving Responsive Programming to Meet All Stakeholders’ Needs

Southeast Tech has long been recognized by its stakeholders for the Institute’s ability to respond to employer needs in a timely manner while assuring the learner receives the appropriate academic training and support required to meet industry need. This responsiveness and ability to meet all stakeholder needs is an Institutional advantage over four-year college competitors who cannot respond with the same agility.