1R1 Common Learning Outcome Results

What are the results for determining if students possess the knowledge, skills, and abilities that are expected at each degree level?

- Summary results of measures (include tables and figures when possible)

- Comparison of results with internal targets and external benchmarks

- Interpretation of results and insights gained

Summary Results of Measures AND Comparison of Results with Internal Targets and External Benchmarks AND Interpretation of Results and Insights Gained

Assessment Results Timeline History

In 2004-2005, Southeast Tech began the process of developing Institutional-level CLO assessments. However, the Institute realized that it could not begin assessments in all four areas at the same time; therefore, the HLC Assessment Committee (now CLT) reviewed the 2001 and 2003 Employer Survey results (Table 1R1.3), which indicated that employers rated graduate communication skills lower than the other three CLO’s. (While the committee recognized the fact that problem-solving skills rated lower than communication skills in 2001, a significant change occurred in that skill rating in 2003. Therefore, the committee focused on communication and decided to wait for future survey results on problem-solving skills.)

Science and Technology

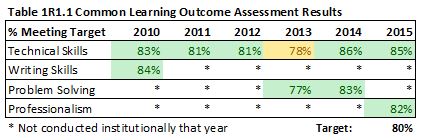

As indicated in Table 1R1.1, Southeast Tech has been conducting science and technology skill achievement assessments (Technical Skills) for many years; in fact, these assessments date back to the 1990s.

2010-2016 Analysis and Insights: Because technical skills vary greatly by program, each program uses its own results for analysis and improvement (1P2 and 1R2). At the Institutional level, these results are documented and analyzed by the Celebrating Learning Team to assure that technical skill attainment, which is key to the mission of the Institute, is reaching the established target (80%). For the past six years, the Institute has met or exceeded the target except in 2013-2014, when it fell to 78%. While no specific reason was determined for the drop, the Institute returned to above-target results the following year and maintained that level in 2015-2016. (Note: 2016-2017 results will be available in summer/fall 2017.)

Communication

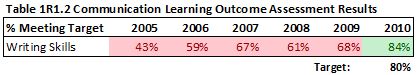

In 2005, the Institute conducted a Writing Across the Curriculum (WAC) assessment pilot. Student writing samples were gathered from all divisions and were analyzed and rated by Southeast Tech English faculty using a Likert scale rubric. An overall rubric score of 22 was determined by the HLC Assessment Committee to represent achievement of the student performance target. The score represented a 69% average on the seven WAC writing categories, which the committee determined would indicate an “average/acceptable score” for workplace writing competence. The Institute continued with the assessment until the results reached the established target level (Table 1R1.2).

2005 Analysis and Insights: As a pilot, Southeast Tech was satisfied with the initial results; however, on reflection, the Institute determined that incomplete directions had been given to faculty regarding the student writing samples. Clearer direction was necessary to assure that the assessment process targeted student writing consistently across campus. Training and clearer direction were provided through monthly meetings and individual program assistance by the HLC Assessment Committee.

2006-2008 Analysis and Insights: Throughout the next three years, Southeast Tech’s HLC Assessment Committee continued to refine the WAC assessment by providing faculty in-service presentations on writing, holding discussions on writing within program areas, and increasing program participation in the assessment process.

2009 Analysis and Insights: The HLC Assessment Committee felt that by 2009 the Institute had developed a solid CLO writing assessment. Programs found the assessment helpful in building and evaluating student writing ability and made curriculum and other changes accordingly. The results and changes were shared at faculty in-service sessions. By analyzing trends and levels of student achievement in writing, program faculty became more aware of the need to emphasize written composition: more writing assignments were introduced into program curriculum, and more program faculty considered writing quality when grading assignments. Throughout this process, Southeast Tech built an awareness of the importance of writing on campus and in the workplace.

2010 Analysis and Insights: In 2010, Southeast Tech met its CLO writing assessment target. With a successful implementation completed, the Institute moved into the development and deployment of assessments for the remaining CLO’s.

Problem Solving/Critical Thinking

Having successfully deployed a Communication CLO assessment, the Institute again reviewed employer survey results for 2009 and 2011 to determine which CLO to target next. As shown in Table 1R1.3, problem-solving skills showed the most opportunity for employer satisfaction improvement. A problem-solving assessment rubric and assessment process were developed in 2011-2012 with a pilot conducted in 2012-2013. After revision and reflection by the CLT, a follow-up assessment was conducted in 2013-2014. Similar to the communications assessments, programs collected student work on problem solving skills appropriate for their career area, scored the work based on a common rubric, and submitted the scores to the Office of Institutional Research, which aggregated the results and presented them to the Celebrating Learning Team for review. Final reports on both years of the problem-solving assessment were provided to stakeholders for further review, reflection, and action.

2013-2014 Analysis and Insights: The CLT used the 2012-2013 pilot problem-solving assessment to determine the effectiveness of the rubric and the associated assessment process. Overall, the Team felt that both the rubric and process were effective in assessing student problem-solving skills. The CLT decided to conduct a full problem-solving assessment the following year and use the two sets of results for a full analysis. The CLT also set as its target that “90% of assessed students will score at a 15 or higher on the established rubric.” A score of 15 would require the student to score an average of a 3 Agree (proficient) on each of the five assessed areas.
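The target arithmetic can be sketched in a few lines of Python. This is only an illustration, not the Institute's actual scoring tool: the function names and the sample ratings are hypothetical, and the only values taken from the text are the five rubric areas, the proficient rating of 3, and the 90% target.

```python
from typing import Sequence

AREAS = 5                           # five assessed rubric areas (from the text)
PROFICIENT = 3                      # "3 Agree (proficient)" rating per area
TARGET_SCORE = AREAS * PROFICIENT   # 5 x 3 = 15
TARGET_SHARE = 0.90                 # 90% of assessed students

def total_score(ratings: Sequence[int]) -> int:
    """Sum one student's ratings across the rubric areas."""
    if len(ratings) != AREAS:
        raise ValueError(f"expected {AREAS} ratings, got {len(ratings)}")
    return sum(ratings)

def share_meeting_target(students: Sequence[Sequence[int]]) -> float:
    """Fraction of assessed students scoring TARGET_SCORE or higher."""
    if not students:
        raise ValueError("no students assessed")
    met = sum(1 for ratings in students if total_score(ratings) >= TARGET_SCORE)
    return met / len(students)

def target_met(students: Sequence[Sequence[int]]) -> bool:
    """True when at least TARGET_SHARE of students reach TARGET_SCORE."""
    return share_meeting_target(students) >= TARGET_SHARE

# Hypothetical ratings for three students (not Institute data).
sample = [
    [3, 3, 3, 3, 3],  # total 15: meets the score target
    [4, 3, 3, 2, 2],  # total 14: falls short
    [4, 4, 3, 3, 2],  # total 16: meets it despite one area rated 2
]
print(share_meeting_target(sample), target_met(sample))
```

Because the target is a total, a student can reach 15 with an average of 3 even when individual areas fall below proficient, as the third hypothetical student shows.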

2014-2015 Analysis and Insights: Thirty-five programs, representing approximately 80% of all Institute programs, and five general education areas (Math, English, General Psychology, Sociology, and Speech) completed the problem-solving assessment. For those areas participating in both assessments, comparison data was provided for each year. As an Institute, 80% (493 out of 614) of the assessed students reached the target level (Table 1R1.1), an increase of 3 percentage points over the pilot assessment’s 77% (573 out of 746). Each program was provided the specific comparison data related to its own students. Overall, 54% of the areas reached “90% of target or higher”. Although the Institute did not reach its target, the CLT had set the target high in order to push for continuous improvement in problem-solving.
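The reported percentages can be reproduced from the student counts given above. The following is a minimal sketch; the helper name `percent_meeting_target` is an assumption, and only the four counts come from the text.

```python
def percent_meeting_target(met: int, assessed: int) -> float:
    """Percentage of assessed students who reached the target level."""
    if assessed <= 0:
        raise ValueError("assessed must be positive")
    return 100 * met / assessed

# Counts reported in the text.
pilot = percent_meeting_target(573, 746)      # 2012-2013 pilot
follow_up = percent_meeting_target(493, 614)  # follow-up assessment

# Difference in percentage points (not a relative percent change).
point_change = follow_up - pilot

print(f"pilot: {pilot:.1f}%  follow-up: {follow_up:.1f}%  "
      f"change: {point_change:.1f} points")
```

Rounded to whole numbers, the two rates are 77% and 80%, so the reported increase of 3 is a difference in percentage points between the rounded figures.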

Analyzing the results in terms of each part of the rubric, the Institute found that “selecting the best solution” and “analyzing solutions” had the greatest opportunity for improvement. The April 2015 “Problem Solving Assessment Report” was provided to all program areas with instructions to use the data for further area improvement (1I1).

Professionalism

In Spring 2016, Southeast Tech conducted a pilot Institutional professionalism assessment that included 13 Southeast Tech programs from across the campus. A total of 134 students were assessed.

2015-2016 Analysis and Insights: Of the five professionalism rubric areas, commitment to lifelong learning appeared to be the most common opportunity for improvement; however, this area is also more difficult to quantify and evaluate, and it will require the Institute to discuss in more depth what constitutes a true measure of lifelong learning. Overall, the results met the target and indicate that the Institute is meeting its professionalism CLO (Table 1R1.1). Programs were provided with their individual results to use for program-level improvement. The Institute is currently conducting the professionalism assessment as a campus-wide measure. The CLT will review these results in summer 2017 and determine future action steps at that time.

Employer Surveys

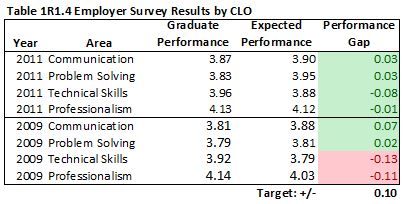

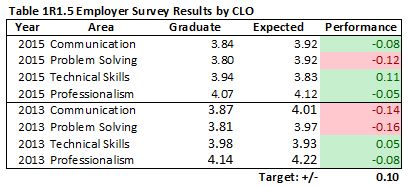

As part of its Employer Survey conducted every other year, Southeast Tech asks employers to rate its most recent graduates (past two years) in a variety of categories. These results, which are directly related to the four common learning outcomes, provide additional support regarding common learning outcome achievement and played a significant role in the Institute’s determination regarding the order for measuring the CLO’s.

Employers are asked to indicate what they expected the graduate performance level to be and the actual performance level for each area.

For Tables 1R1.3, 1R1.4, and 1R1.5, the first number by the CLO indicates the graduates’ actual performance level average. The second number indicates the employer’s expected performance level average. The number in parentheses indicates if the graduate exceeded expectations (+) or not (-). The Southeast Tech goal is for the gap between actual and expected performance to be within +/- .10.
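The gap calculation described above can be sketched as follows. The rating values in the example are hypothetical, since the survey tables are not reproduced here as text, and the function names and entry format are assumptions rather than the Institute's reporting code. Only the +/- .10 goal comes from the text.

```python
TOLERANCE = 0.10  # Southeast Tech goal: gap within +/- .10 (from the text)

def gap(actual: float, expected: float) -> float:
    """Actual minus expected performance, rounded to two decimals."""
    return round(actual - expected, 2)

def within_goal(actual: float, expected: float,
                tolerance: float = TOLERANCE) -> bool:
    """True when the gap falls within the +/- tolerance band."""
    return abs(gap(actual, expected)) <= tolerance

def format_entry(clo: str, actual: float, expected: float) -> str:
    """Render an entry as: CLO, actual, expected, then the signed gap."""
    g = gap(actual, expected)
    sign = "+" if g >= 0 else "-"
    return f"{clo} {actual:.2f} {expected:.2f} ({sign}{abs(g):.2f})"

# Hypothetical averages chosen only to illustrate a gap outside the goal.
print(format_entry("Problem Solving", 3.40, 3.52))
print(within_goal(3.40, 3.52))
```

Rounding the gap before comparing it with the tolerance avoids floating-point artifacts at the boundary, so a gap of exactly .10 counts as within the goal.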

In all three tables, Southeast Tech has found that the Institute is doing an excellent job of meeting employer needs as related to the CLO’s.

2015-2016 Analysis and Insights: Both Table 1R1.3 and Table 1R1.4 are discussed in the sections above in relation to the CLO’s. Results for both the 2013 and 2015 Employer Surveys (Table 1R1.5) indicate a larger negative gap between actual and expected performance for the Problem Solving CLO (-0.14 and -0.12 respectively). While the overall graduate performance scores are similar to or even higher than those of previous years, employer expectations have risen as well, causing Communication and Problem Solving to have a negative gap (-.14 and -.16 respectively) in spring 2013, and Problem Solving to have one again (-.12) in spring 2015. As part of the Strategic Plan (4P2), the Institute will be gathering employer data on the CLO’s to assure that the CLO’s are still meeting industry needs. Additionally, in spring 2017 Southeast Tech initiated sector breakfasts to gather employers’ expectations of the Institute and its graduates directly and so better meet their needs. Finally, the Institute conducted its Employer Survey again during spring/summer 2017.

During summer 2017, the Office of Institutional Research will (1) collect the Employer Survey data and develop a report; (2) collect, with the Celebrating Learning Team, information from employers on the CLO’s and any additions/adjustments based on employer and internal stakeholder input; and (3) gather the data from the sector breakfasts. Once collected, all three will be presented to the CLT for potential review and revision of the CLO’s and to the External Stakeholders Relationships Team for improvements in services and educational offerings to industry (3P3).
